Monday 7 - Friday 11 August

09:00-12:30

Please see Timetable for full details.

Note: this course is strongly linked to the “Program Evaluation and Impact Assessment” (SB106) course, which provides the basis for this one. However, the two courses can be taken separately.

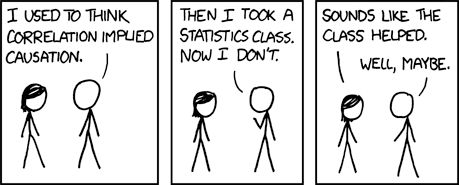

The course will introduce participants to methods of causal inference in the social sciences. The objective is to learn how statistical methods can help us make causal claims about phenomena of interest. By the end of the course, participants will be in a position to 1) critically evaluate statements about causal relationships based on data analysis; and 2) apply a variety of easy-to-implement, design-based methods that will help them draw causal inferences in their own research.

One of the key goals of empirical research is to test causal hypotheses. This task is notoriously difficult without the luxury of experimental data. This course will introduce you to methods that allow you to make convincing causal claims without experimental data. By the end of the course, you will know how to estimate causal effects using the following designs:

Matching

Instrumental Variables

Regression Discontinuity Design

Difference-in-Differences

You can only learn statistics by doing statistics. This is why this course includes a laboratory component, where you will learn to apply these techniques to the analysis of discipline-specific data.

Dániel Horn is a research fellow at the Centre for Economic and Regional Studies of the Hungarian Academy of Sciences, and associate professor at the Department of Economics, Eötvös Loránd University in Budapest.

Besides economics courses, he has taught statistics, introduction to Stata, and various public policy design and evaluation courses at PhD, MA and Bachelor levels for over five years.

He has been conducting educational impact assessment for over a decade. His research areas include education economics, social stratification and educational measurement issues.


This one-week course provides a thorough introduction to the most widely used methods of causal inference. This is an applied methods course, with equal emphasis on methods and their applications.

Do hospitals make people healthier? Is it a problem that more people die in hospitals than in bars? Does an additional year of schooling increase future earnings? Do parties that enter parliament enjoy vote gains in subsequent elections? The answers to these questions (and to many others that affect our daily lives) involve the identification and measurement of causal links: an old problem in philosophy and statistics. To address this problem, we either use experiments or try to mimic them by collecting information on potential factors that may affect both treatment assignment and potential outcomes. In the past, the customary way of doing this was to specify sophisticated versions of multivariate regressions. However, it is by now well understood that causality can only be dealt with at the design stage, not during estimation. The goal of this workshop is to familiarize participants with the logic of causal inference and the theory behind it, and to introduce research methods that help us approach experimental benchmarks with observational data. Hence, this will be a strongly applied course, which aims to provide participants with ideas for strong research designs in their own work and with the knowledge of how to derive and interpret causal estimates based on these designs.

We will start by discussing the fundamental problem of causal inference. After that, we will introduce the potential outcomes framework (covered thoroughly in the Program Evaluation and Impact Assessment course, SB106). We will then illustrate how the selection problem creates bias in naïve estimators of causal effects based on observational data, and we will see how randomization solves the problem of selection bias. The subsequent sessions will be dedicated to the methods through which we can approach the experimental benchmark using observational data.
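To make the selection problem concrete, here is a small illustrative simulation in Python (the course itself works in Stata and R). It assumes a hypothetical setting in which an unobserved baseline variable `u` drives both take-up and outcomes, while the true treatment effect is 2 for every unit. Because both potential outcomes are simulated, the naïve difference in observed means can be split into the average treatment effect on the treated (ATT) plus a selection-bias term; randomizing assignment pushes that bias term towards zero.

```python
import random
from statistics import fmean

random.seed(1)


def simulate(n=100_000, randomize=False):
    """Draw hypothetical potential outcomes (Y0, Y1) with a constant effect of 2.

    When randomize is False, units with a higher unobserved baseline u are
    more likely to take the treatment (self-selection).
    """
    treated, control = [], []
    for _ in range(n):
        u = random.gauss(0, 1)                     # unobserved baseline
        y0 = 10 + 3 * u + random.gauss(0, 1)       # outcome without treatment
        y1 = y0 + 2                                # outcome with treatment
        if randomize:
            d = random.random() < 0.5              # coin-flip assignment
        else:
            d = u + random.gauss(0, 1) > 0         # selection on u
        (treated if d else control).append((y0, y1))
    return treated, control


def naive_difference(treated, control):
    """Observed E[Y | D=1] - E[Y | D=0]: we see Y1 for the treated, Y0 for controls."""
    return fmean(y1 for _, y1 in treated) - fmean(y0 for y0, _ in control)


def decompose(treated, control):
    """Split the naive difference into ATT + selection bias (needs both potential outcomes)."""
    att = fmean(y1 - y0 for y0, y1 in treated)
    selection_bias = fmean(y0 for y0, _ in treated) - fmean(y0 for y0, _ in control)
    return att, selection_bias


for label, flag in [("self-selected", False), ("randomized", True)]:
    t, c = simulate(randomize=flag)
    att, bias = decompose(t, c)
    print(f"{label}: naive={naive_difference(t, c):.2f} ATT={att:.2f} bias={bias:.2f}")
```

Under self-selection the naïve comparison overstates the effect by the selection-bias term; under randomization the treated and control groups have the same expected `Y0`, so that term is close to zero.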

The first method we will examine is matching. Although matching is not a causal inference design in itself but rather a family of techniques that ensure balance on a set of observables (and thus relies on the conditional-on-observables assumption), it is very useful as a first application of the potential outcomes language. We will discuss the logic behind matching and its identification assumptions, and we will see how it differs from standard regression methods.
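A minimal sketch of one-to-one nearest-neighbour matching on a single observed covariate, with replacement, estimating the ATT. The data-generating process is hypothetical: `x` raises both the probability of treatment and the outcome, and the true effect is 1. Each treated unit is compared with the control unit whose `x` is closest, so the comparison is balanced on `x`; this is only credible under the conditional-on-observables assumption, which holds here by construction.

```python
import random
from bisect import bisect_left
from statistics import fmean

random.seed(2)


def make_data(n=2000):
    """Hypothetical data: x confounds treatment and outcome; the true effect is 1."""
    rows = []
    for _ in range(n):
        x = random.uniform(0, 10)
        d = random.random() < x / 10          # higher x -> more likely to be treated
        y = 2 * x + (1.0 if d else 0.0) + random.gauss(0, 1)
        rows.append((x, d, y))
    return rows


def nn_match_att(rows):
    """1-nearest-neighbour matching on x, with replacement; returns the ATT estimate."""
    controls = sorted((x, y) for x, d, y in rows if not d)
    control_x = [x for x, _ in controls]
    effects = []
    for x, d, y in rows:
        if not d:
            continue
        i = bisect_left(control_x, x)
        # The closest control is either just below or just above x in sorted order.
        candidates = [j for j in (i - 1, i) if 0 <= j < len(control_x)]
        j = min(candidates, key=lambda k: abs(control_x[k] - x))
        effects.append(y - controls[j][1])
    return fmean(effects)


rows = make_data()
naive = fmean(y for x, d, y in rows if d) - fmean(y for x, d, y in rows if not d)
print(f"naive difference: {naive:.2f}, matched ATT: {nn_match_att(rows):.2f}")
```

With several covariates, matching is usually done on a distance metric or an estimated propensity score rather than a single variable; Caliendo and Kopeinig (2005), listed under Theory, give practical guidance for the propensity score case.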

After matching, we will turn to the three designs, starting with instrumental variables (IV). We will motivate the discussion with causal diagrams. We will then use a running example to unpack the identification assumptions under which IV can deliver consistent causal estimates. Next, we will focus on estimation issues and applications. We will see both the Wald estimator and its extension with covariates, the two-stage least squares (2SLS) estimator. As a further extension, we will also characterize the compliers and introduce a flexible estimator, the Local Average Response Function (LARF). The LARF estimator will allow us to relax the constant treatment effects assumption when including covariates in both stages of the IV estimation.
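The Wald estimator divides the reduced-form effect of the instrument on the outcome by the first-stage effect of the instrument on the treatment. The sketch below uses a hypothetical encouragement design with a randomized binary instrument `z`, an endogenous binary treatment `d`, and an unobserved confounder `u`; the effect is assumed constant (1.5), so complier heterogeneity plays no role. With one binary instrument and no covariates, two-stage least squares done by hand reproduces the Wald estimate, while naive OLS is biased upwards.

```python
import random
from statistics import fmean

random.seed(3)


def simulate(n=100_000):
    """Hypothetical encouragement design with a constant treatment effect of 1.5.

    z is randomized; u raises both take-up d and the outcome y, so OLS is biased.
    z affects y only through d (exclusion restriction holds by construction).
    """
    data = []
    for _ in range(n):
        z = random.random() < 0.5
        u = random.gauss(0, 1)
        d = 1.2 * z + u + random.gauss(0, 1) > 0.5
        y = 1.5 * d + 2 * u + random.gauss(0, 1)
        data.append((float(z), float(d), y))
    return data


def cov(a, b):
    """Population covariance of two equal-length sequences."""
    ma, mb = fmean(a), fmean(b)
    return fmean((x - ma) * (y - mb) for x, y in zip(a, b))


def wald(data):
    """Reduced form divided by first stage, for a binary instrument."""
    def mean_where_z(col, zval):
        return fmean(row[col] for row in data if row[0] == zval)
    reduced_form = mean_where_z(2, 1.0) - mean_where_z(2, 0.0)
    first_stage = mean_where_z(1, 1.0) - mean_where_z(1, 0.0)
    return reduced_form / first_stage


def two_sls(data):
    """Two-stage least squares by hand: regress d on z, then y on the fitted d."""
    z, d, y = (list(col) for col in zip(*data))
    b1 = cov(d, z) / cov(z, z)
    a1 = fmean(d) - b1 * fmean(z)
    d_hat = [a1 + b1 * zi for zi in z]
    return cov(y, d_hat) / cov(d_hat, d_hat)


def ols_slope(data):
    """Naive OLS slope of y on d, biased here because of u."""
    _, d, y = (list(col) for col in zip(*data))
    return cov(y, d) / cov(d, d)


data = simulate()
print(f"OLS: {ols_slope(data):.2f}  Wald: {wald(data):.2f}  2SLS: {two_sls(data):.2f}")
```

Running the second stage by hand gives the right point estimate but not valid standard errors; dedicated IV routines such as Stata's `ivregress 2sls` compute those correctly.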

The next design we will examine is the regression discontinuity design (RD). We will motivate the discussion with a series of examples from various subfields of sociology, political science and economics. These examples will help everyone grasp the intuition behind the design. We will then clarify the assumptions on which identification is based: under what assumptions does the RD yield unbiased causal estimates? And which causal quantity of interest is estimated? Once we have addressed these questions, we will spend a lot of time explaining how exactly these effects can be estimated. We will cover both parametric and non-parametric estimation. We will discuss inference, including robust confidence intervals for the point estimates. Special attention will be given to the procedure for choosing the bandwidth for the RD analysis. An important next step is to discuss the many robustness checks one needs to perform when using the RD. Before moving to the lab applications, we will also look at how the fuzzy RD operates. We will see the additional assumption this design requires and look at examples that convey the key intuition. Estimation with a fuzzy RD will also be discussed.
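A sketch of the sharp RD estimate as a local linear regression: fit a separate weighted line on each side of the cutoff, using only observations within a bandwidth `h` and triangular kernel weights, and take the difference between the two fitted values at the cutoff. The data are hypothetical (a jump of 0.5 at a cutoff of 0), and the bandwidth is fixed by hand at an assumed value; the data-driven bandwidth selection and robust confidence intervals discussed in the course are not implemented here.

```python
import random

random.seed(4)


def simulate(n=20_000, cutoff=0.0):
    """Hypothetical sharp RD: treated iff the running variable x >= cutoff.

    The outcome is smooth in x except for a jump of 0.5 at the cutoff.
    """
    data = []
    for _ in range(n):
        x = random.uniform(-1, 1)
        d = x >= cutoff
        y = 1 + 0.8 * x - 0.5 * x ** 2 + 0.5 * d + random.gauss(0, 0.3)
        data.append((x, y))
    return data


def wls_intercept(points, weights):
    """Weighted least squares of y on (1, x); returns the fitted value at x = 0."""
    sw = sum(weights)
    mx = sum(w * x for (x, _), w in zip(points, weights)) / sw
    my = sum(w * y for (_, y), w in zip(points, weights)) / sw
    sxx = sum(w * (x - mx) ** 2 for (x, _), w in zip(points, weights))
    sxy = sum(w * (x - mx) * (y - my) for (x, y), w in zip(points, weights))
    return my - (sxy / sxx) * mx


def sharp_rd(data, cutoff=0.0, h=0.2):
    """Local linear RD estimate with a triangular kernel and fixed bandwidth h."""
    sides = {True: ([], []), False: ([], [])}
    for x, y in data:
        dist = x - cutoff                      # centre the running variable at the cutoff
        if abs(dist) < h:
            points, weights = sides[dist >= 0]
            points.append((dist, y))
            weights.append(1 - abs(dist) / h)  # triangular kernel weight
    right = wls_intercept(*sides[True])
    left = wls_intercept(*sides[False])
    return right - left


data = simulate()
print(f"RD estimate at h=0.2: {sharp_rd(data):.3f}")
```

In a fuzzy RD, the same jump in the outcome would be divided by the corresponding jump in the probability of treatment at the cutoff, in the same spirit as the Wald estimator.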

The last estimator we will focus on is the difference-in-differences (DD) estimator. After explaining the logic of the method, we will present the key assumption needed to identify causal effects with the difference-in-differences estimator. We will then look at how these effects can be estimated in a variety of designs, both with two groups and with multiple groups. We will then return to the parallel trends assumption in more detail, showing under what conditions one can assess whether it holds. We will also look at an extension of this design, the difference-in-differences-in-differences (triple differences) estimator. Numerous hands-on applications will be covered, and one of them will serve as the main example for our applied session in the lab.
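In the simplest two-group, two-period case, the DD estimate is the change in the treated group's mean outcome minus the change in the control group's mean; under parallel trends, the control group's change stands in for what would have happened to the treated group without treatment. The sketch below computes this from cell means using made-up toy numbers (not from any study), and computes a triple difference as the DD in an affected subgroup minus the DD in a subgroup assumed to be unaffected.

```python
from statistics import fmean


def did(cells):
    """Two-group, two-period difference-in-differences from cell means.

    cells maps (group, period) -> list of outcomes, with hypothetical labels
    group in {"treated", "control"} and period in {"pre", "post"}.
    """
    m = {key: fmean(values) for key, values in cells.items()}
    change_treated = m["treated", "post"] - m["treated", "pre"]
    change_control = m["control", "post"] - m["control", "pre"]  # common trend
    return change_treated - change_control


def ddd(affected, unaffected):
    """Triple difference: DD in the affected subgroup minus DD in an unaffected one."""
    return did(affected) - did(unaffected)


# Made-up toy numbers for illustration only.
affected = {
    ("control", "pre"): [9.0, 10.0, 11.0],
    ("control", "post"): [11.0, 12.0, 13.0],
    ("treated", "pre"): [13.0, 14.0, 15.0],
    ("treated", "post"): [18.0, 19.0, 20.0],
}
unaffected = {
    ("control", "pre"): [5.0, 6.0, 7.0],
    ("control", "post"): [6.0, 7.0, 8.0],
    ("treated", "pre"): [7.0, 8.0, 9.0],
    ("treated", "post"): [9.0, 10.0, 11.0],
}

print(did(affected))               # (19 - 14) - (12 - 10) = 3.0
print(ddd(affected, unaffected))   # 3.0 - ((10 - 8) - (7 - 6)) = 2.0
```

With multiple groups and periods, the same quantity is typically estimated by a regression with group and period fixed effects plus a treatment indicator, rather than from cell means.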

The lab sessions will draw on the discussion of each of the three designs. IV will be covered in Stata. The LARF estimator will be shown in R. In the RD session, we will look at one example of a sharp design and one of a fuzzy design. We will use both R and Stata for the local linear regression estimator. Polynomial estimation will be shown only in Stata. The bandwidth selection process will also be presented in both Stata and R. Finally, the difference-in-differences estimator will be shown in both Stata and R. It is worth emphasizing that this is not a software-intensive course. I will not spend much time teaching you how Stata and R work in general, which is why full code will be provided so that we can focus on the analysis. That said, if you already use either of the two programs, after this course you will be in a position to implement your analyses using any of the techniques covered in the course.

Prerequisites

Knowledge of statistics at an undergraduate level, including regression analysis.

Some knowledge of Stata (or R)

Solid knowledge of statistics and regression analysis at an undergraduate level is a necessity.

Participants are expected to be familiar with the OLS regression estimator. We will be working mainly in Stata, and full code will be provided. No prerequisite knowledge of any specific software is required, but some knowledge of Stata is useful.

| Day | Topic | Details |
|---|---|---|
| Monday | Session 1: Introduction to the Potential Outcomes Framework<br>Session 2: Matching: Intuition, Estimation, Applications | Lectures |
| Tuesday | Session 1: Matching Lab Session<br>Session 2: Instrumental Variables: Identification & Estimation (Wald Estimator, 2SLS Estimator, LARF Estimator) | Session 1: Lab<br>Session 2: Lecture |
| Wednesday | Session 1: IV Lab<br>Session 2: Regression Discontinuity Design (Motivation, Identification, Estimation Strategies) | Session 1: Lab<br>Session 2: Lecture |
| Thursday | Session 1: RD Lab<br>Session 2: Difference-in-Differences (Motivation, Identification, Estimation) | Session 1: Lab<br>Session 2: Lecture |
| Friday | Session 1: Diff-in-Diff Lab<br>Session 2: Extensions; discussion with participants on how they could use these methods in their own research | Session 1: Lab<br>Session 2: Lecture |

| Day | Topic | Details |
|---|---|---|
| Monday | Session 1: Introduction to the Potential Outcomes Framework (recap of SB106)<br>Session 2: Matching: Intuition, Estimation, Applications | Lectures |
| Tuesday | Session 1: Matching Lab Session<br>Session 2: Instrumental Variables: Identification & Estimation (Wald Estimator, 2SLS Estimator, LARF Estimator) | Session 1: Lab<br>Session 2: Lecture |
| Wednesday | Session 1: IV Lab<br>Session 2: Regression Discontinuity Design (Motivation, Identification, Estimation Strategies) | Session 1: Lab<br>Session 2: Lecture |
| Thursday | Session 1: RD Lab<br>Session 2: Difference-in-Differences (Motivation, Identification, Estimation) | Session 1: Lab<br>Session 2: Lecture |
| Friday | Session 1: Diff-in-Diff Lab<br>Session 2: Extensions; discussion with participants on how they could use these methods in their own research | Session 1: Lab<br>Session 2: Lecture |

| Day | Readings |
|---|---|
| Monday | Angrist, Joshua and Jörn-Steffen Pischke. 2009. Mostly Harmless Econometrics: An Empiricist’s Companion. Princeton: Princeton University Press. Chapters 1 & 4.<br>Morgan, Stephen L. and Christopher Winship. 2007. Counterfactuals and Causal Inference: Methods and Principles for Social Research. Cambridge: Cambridge University Press. Chapters 1, 2 & 7. |
| Tuesday | Sovey, Allison and Donald Green. 2010. “Instrumental Variables Estimation in Political Science: A Readers’ Guide.” American Journal of Political Science 55(1): 188-200.<br>Imbens, Guido. 2014. “Instrumental Variables: An Econometrician’s Perspective.” NBER Working Paper No. 19983. |
| Wednesday | Angrist & Pischke, Chapter 6.<br>Lee, David S. 2008. “Randomized Experiments from Non-Random Selection in U.S. House Elections.” Journal of Econometrics 142(2): 675-97.<br>Imbens, Guido W. and Thomas Lemieux. 2008. “Regression Discontinuity Designs: A Guide to Practice.” Journal of Econometrics 142(2): 615-35.<br>Eggers, Andrew C. and Jens Hainmueller. 2009. “MPs for Sale? Returns to Office in Postwar British Politics.” American Political Science Review 103(4): 513-33.<br>Lee, David S. and Thomas Lemieux. 2009. Regression Discontinuity Designs in Economics. NBER Working Paper No. 14723. |
| Thursday | Angrist & Pischke, Chapter 5.<br>Bechtel, Michael and Jens Hainmueller. 2011. “How Lasting Is Voter Gratitude? An Analysis of the Short- and Long-Term Electoral Returns to Beneficial Policy.” American Journal of Political Science 55(4): 852-68. |
| Friday | Homework: If you have an idea and/or data to which one of the methods can be applied, prepare a 5-minute presentation on how you plan to implement your analysis. Discuss potential threats and how you plan to address them.<br>If you do not have a project in mind, choose any of the readings from the section titled “Applications” and prepare a 5-minute presentation discussing the motivation of the paper and pointing out its identification strategy. |

| Day | Readings |
|---|---|
| Monday | Angrist, Joshua and Jörn-Steffen Pischke. 2009. Mostly Harmless Econometrics: An Empiricist’s Companion. Princeton: Princeton University Press. Chapters 1 & 4.<br>Morgan, Stephen L. and Christopher Winship. 2007. Counterfactuals and Causal Inference: Methods and Principles for Social Research. Cambridge: Cambridge University Press. Chapters 1, 2 & 7. |
| Tuesday | Sovey, Allison and Donald Green. 2010. “Instrumental Variables Estimation in Political Science: A Readers’ Guide.” American Journal of Political Science 55(1): 188-200.<br>Imbens, Guido. 2014. “Instrumental Variables: An Econometrician’s Perspective.” NBER Working Paper No. 19983. |
| Wednesday | Angrist & Pischke, Chapter 6.<br>Imbens, Guido W. and Thomas Lemieux. 2008. “Regression Discontinuity Designs: A Guide to Practice.” Journal of Econometrics 142(2): 615-35.<br>Lee, David S. and Thomas Lemieux. 2009. Regression Discontinuity Designs in Economics. NBER Working Paper No. 14723. |
| Thursday | Angrist & Pischke, Chapter 5. |
| Friday | Homework: If you have an idea and/or data to which one of the methods can be applied, prepare a 5-minute presentation on how you plan to implement your analysis. Discuss potential threats and how you plan to address them.<br>If you do not have a project in mind, choose any of the readings from the section titled “Applications” and prepare a 5-minute presentation discussing the motivation of the paper and pointing out its identification strategy. |

We will use Stata extensively throughout the course.

Participants may bring their own laptops, but the course will be partially taught in a computer lab.

Theory

Impact Evaluation in Practice (World Bank, 2011)

Bound, John, David A. Jaeger, and Regina M. Baker. 1995. “Problems with Instrumental Variables Estimation When the Correlation Between the Instruments and the Endogeneous Explanatory Variable Is Weak.” Journal of the American Statistical Association 90(430):443–50.

Caliendo, Marco and Sabine Kopeinig. 2005. Some Practical Guidance for the Implementation of Propensity Score Matching. Institute for the Study of Labor (IZA).

Jacob, Robin, Pei Zhu, Marie-Andrée Somers, and Howard Bloom. 2012. “A Practical Guide to Regression Discontinuity.” mdrc. Retrieved March 5, 2017 (http://www.mdrc.org/publication/practical-guide-regression-discontinuity).

Applications

Matching

Angrist, Joshua D. and Victor Lavy. 2001. “Does Teacher Training Affect Pupil Learning? Evidence from Matched Comparisons in Jerusalem Public Schools.” Journal of Labor Economics 19(2):343–69.

Dolton, Peter and Jeffrey A. Smith. 2011. The Impact of the UK New Deal for Lone Parents on Benefit Receipt. Rochester, NY: Social Science Research Network.

IV

Angrist, J. D. and A. B. Krueger. 1991. “Does Compulsory School Attendance Affect Schooling and Earnings?” The Quarterly Journal of Economics 106(4):979–1014.

Angrist, Joshua D. 1998. “Estimating the Labor Market Impact of Voluntary Military Service Using Social Security Data on Military Applicants.” Econometrica 66(2):249–88.

Angrist, Joshua D. and Victor Lavy. 1999. “Using Maimonides’ Rule to Estimate the Effect of Class Size on Scholastic Achievement.” The Quarterly Journal of Economics 114(2):533–75.

RD

Lalive, Rafael. 2008. “How Do Extended Benefits Affect Unemployment Duration? A Regression Discontinuity Approach.” Journal of Econometrics 142(2):785–806.

Ludwig, Jens and Douglas L. Miller. 2007. “Does Head Start Improve Children’s Life Chances? Evidence from a Regression Discontinuity Design.” The Quarterly Journal of Economics 122(1):159–208.

DiD

Borjas, George J. 2015. The Wage Impact of the Marielitos: A Reappraisal. National Bureau of Economic Research.

Card, David. 1990. “The Impact of the Mariel Boatlift on the Miami Labor Market.” ILR Review 43(2):245–57.

Card, David and Alan B. Krueger. 1994. “Minimum Wages and Employment: A Case Study of the Fast-Food Industry in New Jersey and Pennsylvania.” American Economic Review 84(4):772–93.

Card, David and Alan B. Krueger. 2000. “Minimum Wages and Employment: A Case Study of the Fast-Food Industry in New Jersey and Pennsylvania: Reply.” American Economic Review 90(5):1397–1420.

Pischke, Jörn-Steffen. 2007. “The Impact of Length of the School Year on Student Performance and Earnings: Evidence From the German Short School Years.” The Economic Journal 117(523):1216–42.
